Once dismissed as a far-off idea better suited to an episode of The Jetsons than to real life, vehicles that can drive themselves are getting frighteningly close to reality.

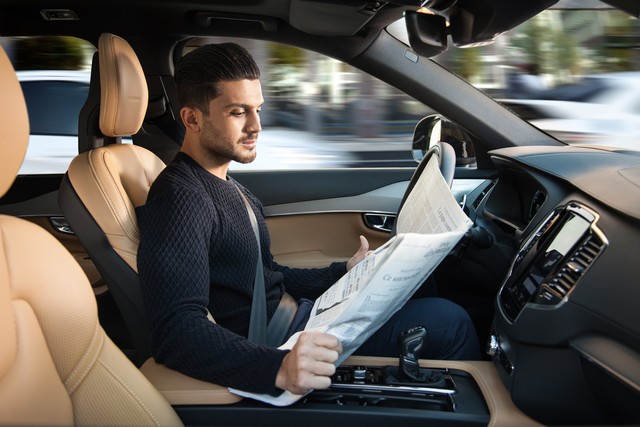

Although they aren’t on our roads quite yet, autonomous technology is edging ever closer: several locations in the UK are now actively testing driverless pods, Google and Volvo are hard at work realising their concepts, and Tesla is introducing driverless updates for its Model S.

Yet amongst the din of tech firms busy forging ahead with the latest and greatest in autonomous technologies, there stands a lone voice that’s saying: “Slow down”.

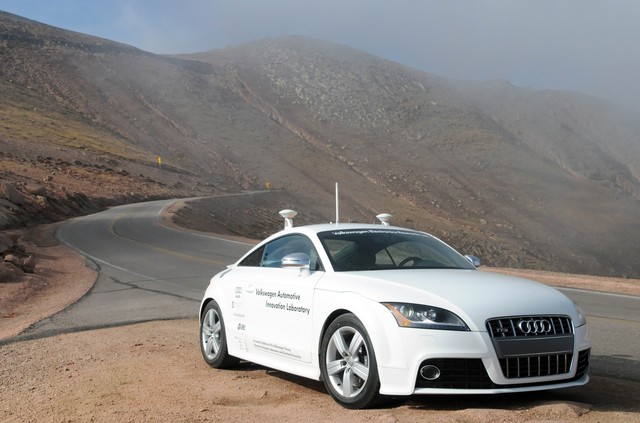

That voice belongs to Stanford University engineering professor Chris Gerdes, who has long worked on programming cars to drive themselves around race tracks. He and his students at Stanford famously programmed an Audi, affectionately named Shelley, to flawlessly negotiate the 153 turns of the 12.4-mile Pikes Peak course in Colorado, with no one at the wheel.

Raised in North Carolina near the Charlotte Motor Speedway, Gerdes has been at the forefront of the driverless car movement since its inception, monitoring the brains of top racing drivers and creating cars which imitate them.

In spite of being one of the most enthusiastic proponents of autonomous car technology, however, he’s recently become the cause of a great deal of discomfort to both car manufacturers and tech companies.

Regarded as the “Switzerland” of the car industry, its neutral voice according to philosophy professor Patrick Lin, Gerdes believes there are still a lot of wrinkles to be ironed out, even if the technology is there. As he puts it, there remain “a lot of subtle, but important things yet to be solved.”

Central to Gerdes’ stance is the need to explore the ethics of driverless technology, which must inevitably be programmed into the robotic minds of cars that are expected to soon be driving on their own around the country’s motorways.

Professor Lin said: “Within the autonomous driving industry, Chris is regarded as Switzerland, he’s neutral. He’s asking the hard questions about ethics and how it’s going to work. He’s pointing out that we have to do more than just obey the law.”

Such is the importance of Gerdes’ expertise that chief executives from Tesla, General Motors and Ford have recently been meeting at his lab in order to run their ideas and concepts past him. His message: not so fast.

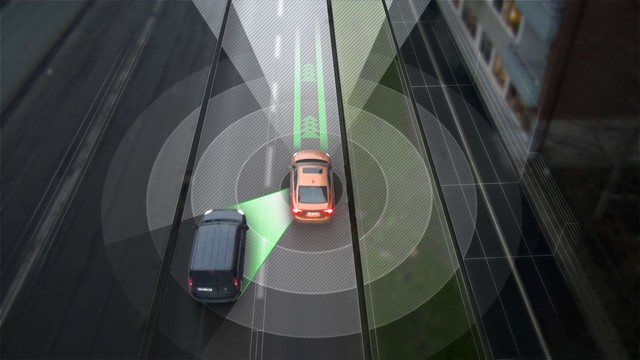

One experiment conducted by Gerdes and his students involved an autonomous dune buggy, which was programmed to try to navigate a set of traffic cones that simulated a construction team working over a manhole.

The buggy was faced with a choice: it could either obey the law against crossing double yellow lines and hit the cones, or break the law and spare the lives of the traffic cone “workers”. After a bit of deliberation, it split the difference, veering at the last possible moment and nearly hitting the cones.

According to Gerdes, problems like these are exactly the kind of ethical considerations that driverless cars will encounter on a regular basis in the real world. It’s clear that the car should have crossed the lines to avoid the crew, but it’s less clear how to go about programming a machine to make complex ethical calls, or to decide when to break the law.
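To see why this is hard to program, it can help to write the dilemma down. The following is a minimal sketch, not the method Gerdes’ team used: it assumes the car scores each possible manoeuvre with a single cost that adds up the risk of harm and the penalty for breaking the law. The class `Manoeuvre`, the functions `cost` and `choose`, the risk figures and both weights are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Manoeuvre:
    name: str
    collision_risk: float        # assumed chance (0 to 1) of hitting the "workers"
    crosses_double_yellow: bool  # True if the manoeuvre breaks the traffic code


def cost(m: Manoeuvre, harm_weight: float = 1000.0, law_weight: float = 1.0) -> float:
    """Weighted sum of expected harm and legal violation (illustrative only)."""
    legal_penalty = law_weight if m.crosses_double_yellow else 0.0
    return harm_weight * m.collision_risk + legal_penalty


def choose(options, harm_weight: float = 1000.0, law_weight: float = 1.0) -> Manoeuvre:
    """Pick the manoeuvre with the lowest cost under the given weights."""
    return min(options, key=lambda m: cost(m, harm_weight, law_weight))


# Made-up numbers loosely modelled on the traffic cone scenario.
options = [
    Manoeuvre("stay in lane", collision_risk=0.9, crosses_double_yellow=False),
    Manoeuvre("veer late", collision_risk=0.4, crosses_double_yellow=True),
    Manoeuvre("cross early", collision_risk=0.01, crosses_double_yellow=True),
]

# Harm weighted far above legality: the car crosses the lines early.
safety_first = choose(options)

# Legality weighted far above harm: the car stays in lane and hits the cones.
law_first = choose(options, harm_weight=1000.0, law_weight=10000.0)

print(safety_first.name, "|", law_first.name)
```

In this sketch the arithmetic is trivial. The ethical decision lives entirely in the two weights, and somebody has to pick them.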

“We need to take a step back and say, ‘Wait a minute, is that what we should be programming the car to think about? Is that even the right question to ask?’” he told Bloomberg. “We need to think about traffic codes reflecting actual behaviour to avoid putting the programmer in a situation of deciding what is safe versus what is legal.”

Initially, Gerdes said, he was dismissive of the importance of philosophy to driverless cars. In his view, vehicles programmed with robot reflexes could drastically reduce highway deaths, so wasn’t that moral enough?

However, he soon came to see both the significance of robot ethics and its painful complexity. For example, when an accident is unavoidable, should a driverless car aim for the smallest object to protect its occupant? What if that object were a baby’s pram? Does the car owe more to its passengers than it does to others?

When human drivers face impossible dilemmas, they have to make split-second decisions in the heat of the moment, and their mistakes can be forgiven. But if a machine is specifically programmed to make a choice, who’s to blame?

“It’s important to think about not just how these cars will drive themselves, but what’s the experience of being in them and how do they interact,” Gerdes said. “The technology and the human really should be inseparable.”

When Gerdes was a child, his uncle designed a race car for Mario Andretti on behalf of Chrysler, and the young Gerdes once spent an afternoon chatting with an up-and-coming Dale Earnhardt Sr, one of the most famed NASCAR drivers of all time.

When he wasn’t obsessing over cars, he read Isaac Asimov’s science fiction novels, whose laws of robotics became a rulebook for robots and artificial intelligence that ethicists still refer to today. Asimov’s first rule: an autonomous machine may not injure a human being or, through inaction, allow a human to be harmed.

At 46 years old, Gerdes is currently in training to earn his racing licence, and once a month he takes his crew to the Thunderhill Raceway to test the very limits of autonomy. As part of his teaching method, he requires all his students to drive hot laps: to fully automate driving, he believes, it needs to be understood at its most extreme.

Despite the difficult ethical considerations, he remains unperturbed: later this month he and his team are due to unveil a self-driving DeLorean DMC-12, which they’ve nicknamed Marty.

“With any new technology, there’s a peak in hype and then there’s a trough of disillusionment,” he said. “We’re somewhere on that hype peak at the moment. The benefits are real, but we may have a valley ahead of us before we see all of the society-transforming benefits of this sort of technology.”