Autonomous transportation is currently all the rage in the tech world, with virtually every car manufacturer now making some sort of effort to bring cars which require no driver input to market.

So far, the majority of the technology is fairly inoffensive and innocuous: systems which can automatically brake if an imminent collision is detected, or intelligent cruise control. As more of it becomes standard, however, manufacturers are starting to push the boat out even further.

For example, Tesla’s new Autopilot feature, which allows any Tesla car outfitted with it to automatically drive itself with no help from the driver along a main road or motorway, was released last week.

Audi also now offers a system that allows your car to pilot itself while you’re stuck in a traffic jam, and which can even decide to change lanes on your behalf if it thinks there’s a quicker route.

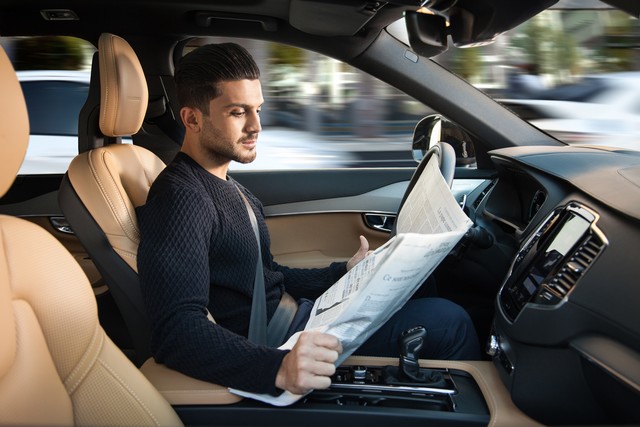

The end goal is vehicles which can successfully navigate roads and drive their occupants around, without the driver or any of the passengers having to provide any input whatsoever.

It sounds like a fun idea, something ripped straight from an episode of The Jetsons, where people wake up in the morning and jet off to work in their autonomous robot car. However, the reality is much more ethically challenging.

What, for example, should happen if the car gets into difficulty? How should it be programmed to act in the event of an unavoidable accident? Should it minimise loss of life, even if it means sacrificing its own occupants? Should it prioritise one life over another, depending on circumstances?

The reality is this: driverless cars must be programmed to be able to make the decision to kill people.

It’s a subject which has troubled Stanford University professor Chris Gerdes, who has worked for years now developing computer programs which allow cars to drive themselves on autopilot, including a robotic drifting DeLorean affectionately named MARTY.

One experiment conducted by Gerdes and his students at the university involved an autonomous dune buggy that was programmed to navigate a set of traffic cones that simulated a construction team working over a manhole.

The buggy was then faced with a choice: it could either obey the law against crossing a set of double yellow lines and hit the cones, or break the law and spare the lives of the workers. After some deliberation, the car split the difference by veering at the last possible moment and nearly hitting the cones.

According to Gerdes, problems like these are exactly the kind of ethical considerations that driverless cars will encounter on a regular basis in the real world. It’s clear that the car should have crossed the lines to avoid the crew, but it’s less clear how to go about programming a machine to make complex ethical calls, or to decide when to break the law.

It gets more complex still: if a driverless car is forced to choose between driving itself off a cliff to avoid hitting a baby’s pram and ploughing through the pram to save its occupant, which route should it take?

Questions like this aren’t just a matter of ethical discussion, either. Ultimately, they could have a massive impact on the way that self-driving vehicles are accepted in society; who would buy a car that’s been programmed to sacrifice its owner?

According to Jean-Francois Bonnefon of the Toulouse School of Economics, there is no right or wrong answer to these questions. Public opinion will play a strong role in how, or even if, self-driving cars ever become accepted.

Bonnefon and his team set out to understand what the public thinks about some of the ethical dilemmas that face the cars, and how people respond to them. The idea, the team says, is that the public is more likely to go along with something which aligns with its own viewpoint.

Their scenario involves a situation where a self-driving car is forced into the path of 10 pedestrians crossing the road. It can’t stop in time, but it can avoid killing the pedestrians by steering into a wall; however, the collision will kill its driver and the two other occupants inside. What should it do?

One approach would be to minimise the loss of life by steering into the wall and thereby killing three people instead of 10. However, that approach might have other consequences: if fewer people buy self-driving cars because they tend to kill their owners, more people are statistically likely to die, because ordinary cars driven by humans are involved in so many more accidents.
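To make that trade-off concrete, here is a rough sketch in Python. It is not taken from the study: the function names, the baseline of 1,000 deaths and the adoption rates are all hypothetical, chosen purely for illustration, and the risk ratio of 0.1 simply assumes that a self-driving car is involved in a tenth as many fatal accidents as a human-driven one.

```python
def swerve_saves_lives(pedestrians: int, occupants: int) -> bool:
    """Return True if steering into the wall kills fewer people than staying on course."""
    return occupants < pedestrians


def expected_road_deaths(adoption_rate: float, human_deaths: float, av_risk_ratio: float) -> float:
    """Estimate total deaths when a share of driving moves to self-driving cars.

    adoption_rate: fraction of driving done by self-driving cars (0.0 to 1.0).
    human_deaths:  deaths if every car were human-driven (hypothetical baseline).
    av_risk_ratio: deaths caused by a self-driving car relative to a human-driven one.
    """
    if not 0.0 <= adoption_rate <= 1.0:
        raise ValueError("adoption_rate must be between 0 and 1")
    deaths_from_human_drivers = (1.0 - adoption_rate) * human_deaths
    deaths_from_self_driving = adoption_rate * human_deaths * av_risk_ratio
    return deaths_from_human_drivers + deaths_from_self_driving


# The single crash: three occupants against 10 pedestrians.
print(swerve_saves_lives(pedestrians=10, occupants=3))

# The wider picture, with made-up numbers: if owner-sacrificing cars put
# buyers off, adoption falls from 50% to 20% of all driving.
BASELINE_DEATHS = 1000.0  # hypothetical
AV_RISK_RATIO = 0.1       # assumption, not a measured figure
print(expected_road_deaths(0.5, BASELINE_DEATHS, AV_RISK_RATIO))
print(expected_road_deaths(0.2, BASELINE_DEATHS, AV_RISK_RATIO))
```

Under these assumptions, the rule that saves the most lives in one crash can still cost lives overall if it scares enough buyers away. The size of that effect depends entirely on the numbers plugged in.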

The researchers also varied some of the details, which included the number of pedestrians that could be saved, whether the driver or the car’s computer made the decision to swerve, and whether the participants were asked to imagine themselves as a passenger or as an anonymous bystander.

Bonnefon’s results were interesting, if a little predictable. According to the research, the majority of people were comfortable with the idea that self-driving vehicles should be programmed to minimise the death toll, even at the expense of their owners.

However, they were only willing to go so far. The study added: “Participants were not as confident that autonomous vehicles would be programmed that way in reality—and for a good reason: they actually wished others to cruise in utilitarian autonomous vehicles, more than they wanted to buy utilitarian autonomous vehicles themselves.”

Therein lies the paradox of the situation: people want cars that sacrifice their occupants to save others’ lives, so long as they don’t have to drive one themselves.

The researchers’ work represents only the first few steps into what’s likely to be an incredibly complex moral maze. Any number of issues will need to be factored in and accounted for before driverless cars ever reach the mass market.

Should an autonomous vehicle avoid a motorcycle by swerving into a wall, given that the car’s occupants are more likely to survive than the motorcyclist? Should it change its rules when children are on board? And if a manufacturer builds a different algorithm, is it responsible for the harmful outcomes of the car’s decisions?

Earlier this month, a report from US-based consulting firm McKinsey & Company claimed that driverless cars could reduce on-road fatalities by 90 per cent. What happens in the remaining 10 per cent of cases, however, is likely to forever be the subject of debate.